Bulletproof backups - How I keep my precious data safe (strategy + tools)

I want to talk about backups. We are all supposed to have backups, right? When you go to a computer repair shop and tell them your computer isn’t working, the first question that they would ask you is - do you have a backup? If you have a computer and don’t take regular backups of the whole system at least once a week, keep reading.

Even in my Computer Science department, at least once a month, I hear about a friend who lost all their data and did not have backups. Even the technically-minded are not doing the right thing. The tax return forms, a lifetime’s worth of photos, class papers, music and movies and what not - all gone.

When I ask people if they have backups, if they don’t say “yes”, they say something along the lines of “no, but I don’t have anything important on my computer” or “no, but my disk won’t fail”. That’s the wrong mindset. Any data is important. And all disks fail. Or someone steals your computer, and you have a paper due that night? Do you have a contingency plan?

Backup Mindset

Anything that can go wrong, will go wrong. - Murphy’s Law

I am paranoid. I always like to be prepared. Since my computer is the most important means of work and communication for me, I cannot leave its failure to chance.

You will lose your data (partially or fully) at least once a year. It’s the law of nature (maybe not). You will drop your computer, or spill water or coffee on it, or your OS will f*ck up. When you lose your data, you can let it be a five-minute problem, or you can let it ruin your entire day. I choose the former.

So having established that data loss is inevitable, and the importance of backups, the next question is, what is the best backup strategy?

The best strategy

There is no “best” strategy, but I will share the most widely used and trusted one with you. It’s the one I use, and have not had issues with. Here we go:

- Have at least three different backups on at least two different mediums.

- At least one should be off-site (cloud) and at least one should be local (an external hard drive).

- Backups should be both continuous (think Time Machine) and archives (weekly and monthly).

That’s it. Three simple rules. What’s my personal strategy? I’m glad you asked.

Personally, I have the following setup:

- All my data (not apps or system files) is in Google Drive. I have upgraded to the 100 GB plan, which is enough for me. All the files I care about even slightly (which is basically all) are well-organized in Drive and get continuously synced. I get versioning out of the box. So even if my own backups fail, I can go back to Drive and recover files.

- Most of my photos are stored in Google Photos. Some are in Google Drive. So, I don’t need an exorbitant amount of disk storage for photos or videos.

- Time Machine snapshots are taken every week on an external drive. This is an offline backup that I can use if I’m not connected to the Internet and need to recover some data. My HDD is big enough to save 7 to 8 snapshots (meaning I can go up to 2 months back to recover files). Unless I have to fresh install OS X, I rarely have to recover from this drive.

-

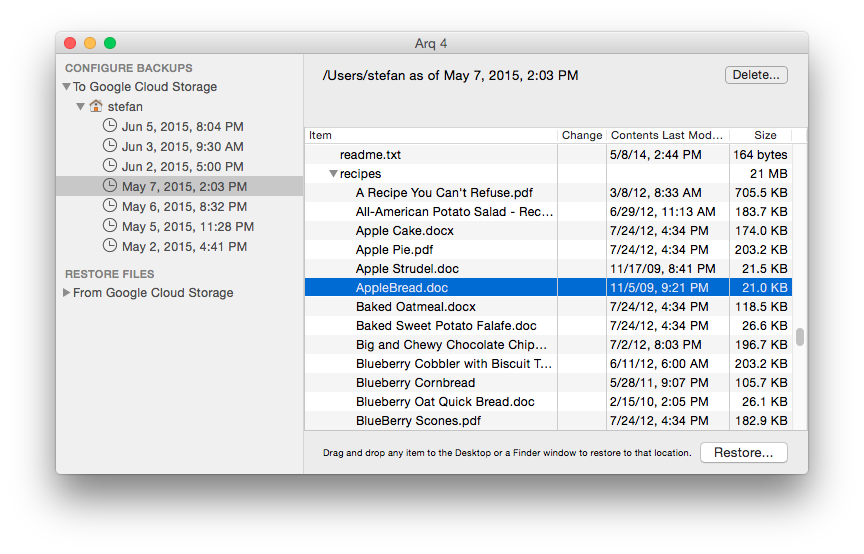

My off-site backups are done by Arq. Arq takes hourly backups for the past 24 hours, daily backups for the past week, weekly backups for the past month, and monthly backups until I hit my 200 GB budget. According to my calculations, I can store 6 months of backups in that 200 GB quota.
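To make the thinning schedule described for Arq a bit more concrete, here is a minimal Python sketch of a tiered retention rule: hourly for the last 24 hours, daily for the last week, weekly for the last month, monthly for anything older. This is only my reading of the schedule, not Arq’s actual implementation. The function name `thin_snapshots` and the exact cut-offs (7 and 30 days) are assumptions made for illustration, and the 200 GB budget cap is left out.

```python
from datetime import datetime, timedelta


def thin_snapshots(snapshots, now):
    """Return the snapshot timestamps to keep under a tiered retention rule.

    Assumed tiers (illustrative, not Arq's real code):
    one per hour for the last 24 hours, one per day for the last 7 days,
    one per ISO week for the last 30 days, one per month for anything older.
    """
    kept = {}
    for ts in sorted(snapshots, reverse=True):  # newest first
        age = now - ts
        if age < timedelta(0):
            continue  # ignore timestamps from the future
        if age <= timedelta(hours=24):
            bucket = ("hour", ts.year, ts.month, ts.day, ts.hour)
        elif age <= timedelta(days=7):
            bucket = ("day", ts.year, ts.month, ts.day)
        elif age <= timedelta(days=30):
            iso = ts.isocalendar()
            bucket = ("week", iso[0], iso[1])
        else:
            bucket = ("month", ts.year, ts.month)
        # Because we iterate newest first, the first snapshot seen
        # in each bucket is the newest one, and that is the one we keep.
        kept.setdefault(bucket, ts)
    return sorted(kept.values())


if __name__ == "__main__":
    now = datetime(2024, 3, 1, 12, 0)
    # Pretend a backup ran every hour for the last 60 days.
    hourly = [now - timedelta(hours=h) for h in range(24 * 60)]
    kept = thin_snapshots(hourly, now)
    print(f"{len(hourly)} snapshots taken, {len(kept)} kept")
```

The point of the tiers is that recent history stays fine-grained while older history gets coarser, which is why months of backups can fit in a fixed storage budget.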

More on Arq

Because my primary backups are done by Arq, I think it’s important to elaborate a bit more on that.

First, Arq is a Mac and Windows utility that costs $50 (or $80 if you want lifetime updates) and is amazing!

Second, more on my backups. I’ve set up Arq to back up to AWS S3 (East zone) and Google Cloud Storage (West zone). S3 backups happen every hour at 00 minutes and GCS backups every hour at 30 minutes. I don’t trust any single entity fully, and need some redundancy. (Sidenote - redundancy is the core of reliable computing).

Said another way, S3 can (and will) go down and lose (some of) my data. Similarly, GCS can (and will) go down and lose (some of) my data. The probability of them going down and losing my data at the same time is close to zero. Moreover, if both US East (S3) and US West (GCS) data centers were to go offline and have their storage destroyed at the same time, we have bigger problems than my data.

Everything going over the network for backup purposes is encrypted with a secure password. I do not use those passwords for anything else. Encryption is critical for data integrity and security, and is a built-in feature of Arq.

Let’s talk cost

That’s a lot of backups, you must be thinking. Indeed it is. What might surprise you is that it’s not really expensive or time-consuming.

S3 backups are stored in the Standard storage class, and GCS backups in the Nearline class. My estimated monthly cost for both of them (200 GB each) is between $5 and $7. I skip Starbucks twice a month to pay for my backups. True story.
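For anyone who wants to redo that estimate with their own numbers, here is a rough back-of-the-envelope sketch in Python. The per-GB prices are assumptions (roughly the published US list prices for S3 Standard and GCS Nearline), so check the providers’ pricing pages before relying on them. The calculation covers storage only and ignores request, retrieval and egress fees.

```python
# Assumed list prices in USD per GB-month; these are illustrative and may be
# out of date. Check the AWS and Google Cloud pricing pages for real numbers.
ASSUMED_PRICE_PER_GB = {
    "s3_standard": 0.023,
    "gcs_nearline": 0.010,
}


def monthly_storage_cost(gb_per_provider, prices=ASSUMED_PRICE_PER_GB):
    """Storage-only monthly cost; ignores request, retrieval and egress fees."""
    return sum(gb * prices[name] for name, gb in gb_per_provider.items())


cost = monthly_storage_cost({"s3_standard": 200, "gcs_nearline": 200})
print(f"${cost:.2f} per month")  # prints "$6.60 per month"
```

With these assumed prices, a full 200 GB on each provider lands inside the $5 to $7 range quoted above.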

All this takes almost no work on my part. Arq backups happen in the background. All I have to do is plug in a hard drive at my desk every week. All-in, I spend about an hour a month managing my backups.

Recovery

Putting in fire alarms and fire extinguishers only goes so far. They are useless if you don’t know how they work. Similarly, backing up is only 10% of the game. The other 90% is testing restores.

Every now and then, you should test a backup restore - call it a backup drill. For cloud backups, I test restoring random files. For Time Machine, I restore to a VM. If something is wrong, I wipe the backup and start over. This is critical because:

- You’ll know exactly what to do when you need to restore. This reduces panic when you lose data.

- You want to catch corrupted backups early and clean them up. If your backup is faulty, that defeats the whole point.
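A backup drill for a handful of files can be partly automated. The sketch below is one possible way to do it, not a feature of Arq or Time Machine: it compares a folder of originals with a folder of restored copies by SHA-256 checksum and lists anything missing or different. The function names and the folder paths in the usage note are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_restore(original_dir, restored_dir):
    """Return (relative path, problem) pairs for files that are missing
    from the restored copy or whose content differs from the original."""
    original_dir, restored_dir = Path(original_dir), Path(restored_dir)
    problems = []
    for src in sorted(original_dir.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(original_dir)
        dst = restored_dir / rel
        if not dst.is_file():
            problems.append((rel.as_posix(), "missing"))
        elif sha256_of(src) != sha256_of(dst):
            problems.append((rel.as_posix(), "content differs"))
    return problems
```

Usage would look something like `compare_restore("/path/to/Documents", "/path/to/restored/Documents")`. An empty list means every original file came back byte-for-byte identical. Keep in mind that files edited after the backup ran will show up as different, so this works best on files you have not touched since.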

Conclusion

I hope this post has been helpful to you. I highly recommend you set up some sort of backup strategy for yourself and save yourself headaches.

If you are confused by anything, feel free to hit me up on Twitter. I’d be happy to answer your questions.